Below you will find pages that utilize the taxonomy term “Testing”

Quick Testing Tips: One Class, One Test?

I’ve mentioned this several times without explaining: the rule that every class should have a test, or that every class method should have a test, does not make sense at all. Still, it’s a rule that many teams follow. Why? Maybe they used to have a #NoTest culture and they never want to go back to it, so they establish a rule that is easy to enforce. When reviewing you only have to check: does the class have a test? Okay, great. It’s a bad test? No problem, it is a test. I already explained why I think you need to make an effort to not just write any test, but to write good tests (see also: Testing Anything; Better Than Testing Nothing?). In this article I’d like to look closer at the numbers: one class - one test.

Quick Testing Tips: Write Unit Tests Like Scenarios

I’m a big fan of the BDD Books by Gáspár Nagy and Seb Rose, and I’ve read a lot about writing and improving scenarios, like Specification by Example by Gojko Adzic and Writing Great Specifications by Kamil Nicieja. I can recommend reading anything from Liz Keogh as well. Trying to apply their suggestions in my development work, I realized: specifications benefit from good writing. Writing benefits from good thinking. And so does design. Better writing, thinking, designing: this will make us do a better job at programming. Any effort put into these activities has a positive impact on the other areas, even on the code itself.

Quick Testing Tips: Testing Anything; Better Than Testing Nothing?

“Yes, I know. Our tests aren’t perfect, but it’s better to test anything than to test nothing at all, right?”

Let’s look into that for a bit. We’ll try the “Fowler Heuristic” first:

One of my favourite (of the many) things I learned from consulting with Martin Fowler is that he would often ask “Compared to what?”

- Agile helps you ship faster!

- Compared to what?

[…]

Often there is no baseline.

Quick Testing Tips: Self-Contained Tests

Whenever I read a test method I want to understand it without having to jump around in the test class (or worse, in dependencies). If I want to know more, I should be able to “click” on one of the method calls and find out more.

I’ll explain later why I want this, but first I’ll show you how to get to this point.

As an example, here is a test I encountered recently:

Don't test constructors

@ediar asked me on Twitter if I still think a constructor should not be tested. It depends on the type of object you’re working with, so I think it’ll be useful to elaborate here.

Would you test the constructor of a service that just gets some dependencies injected? No. You’ll test the behavior of the service by calling one of its public methods. The injected dependencies are collaborating services and the service as a whole won’t work if anything went wrong in the constructor.
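A minimal sketch of this idea in plain PHP, without a test framework. The names `TaxRates`, `FlatTaxRates` and `PriceCalculator` are hypothetical and not taken from the article. The check never looks at the constructor; it only calls a public method, and it would fail anyway if the constructor had not stored the dependency properly.

```php
<?php
declare(strict_types=1);

// Hypothetical collaborator, introduced only for this illustration.
interface TaxRates
{
    public function percentageFor(string $category): int;
}

// Simple stand-in that returns the same percentage for every category.
final class FlatTaxRates implements TaxRates
{
    private int $percentage;

    public function __construct(int $percentage)
    {
        $this->percentage = $percentage;
    }

    public function percentageFor(string $category): int
    {
        return $this->percentage;
    }
}

// The service: its constructor only receives an injected dependency.
final class PriceCalculator
{
    private TaxRates $taxRates;

    public function __construct(TaxRates $taxRates)
    {
        $this->taxRates = $taxRates;
    }

    public function priceIncludingTax(int $netInCents, string $category): int
    {
        $percentage = $this->taxRates->percentageFor($category);

        return $netInCents + intdiv($netInCents * $percentage, 100);
    }
}

// Exercise the behavior through the public method only.
$calculator = new PriceCalculator(new FlatTaxRates(21));
if ($calculator->priceIncludingTax(1000, 'books') !== 1210) {
    throw new RuntimeException('Unexpected price including tax');
}
```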

Testing your controllers when you have a decoupled core

A lot can happen in 9 years. Back then I was still advocating that you should unit-test your controllers and that setter injection is very helpful when replacing controller dependencies with test doubles. I’ve changed my mind: constructor injection is the right way for any service object, including controllers. And controllers shouldn’t be unit tested, because:

- Those unit tests tend to be a one-to-one copy of the controller code itself. There is no healthy distance between the test and the implementation.

- Controllers need some form of integrated testing, because by zooming in on the class-level, you don’t know if the controller will behave well when the application is actually used. Is the routing configuration correct? Can the framework resolve all of the controller’s arguments? Will dependencies be injected properly? And so on.

The alternative I mentioned in 2012 is to write functional tests for your controller. But this is not preferable in the end. These tests are slow and fragile, because you end up invoking much more code than just the domain logic.

Unit test naming conventions

Recently I was asked whether I could explain these four lines:

/**
 * @test
 */
public function it_works_with_a_standard_use_case_for_command_objects(): void

The author of the email had some great points.

- For each, my test I should write +3 new line of code instead write, public function testItWorksWithAStandardUseCaseForCommandObjects(): void

- PSR-12 say that “Method names MUST be declared in camelCase”. [The source of this is actually PSR-1].

- In PHPUnit documentation author say “The tests are public methods that are named test*” and left example below

Functional tests, and speeding up the schema creation

When Symfony2 was created I first learned about the functional test, which is an interesting type of test where everything about your application is as real as possible. Just like with an integration or end-to-end test. One big difference: the test runner exercises the application’s front controller programmatically, instead of through a web server. This means that the input for the test is a Request object, not an actual HTTP message.

Is not writing tests unprofessional?

Triggered by Marco Pivetta who apparently said during his talk at Symfony Live Berlin: “If you still don’t have tests, this is unprofessional”, I thought I’d tweet about that too: “It’s good for someone to point this out from time to time”.

I don’t like it when a blog post is about tweets, just as I don’t like it when a news organization quotes tweets; as if they are some important source of wisdom. But since this kind of tweet tends to invoke many reactions (and often emotionally charged ones too), I thought it would be smart to get the discussion off Twitter and write something more nuanced instead.

Test-driving repository classes - Part 2: Storing and retrieving entities

In part 1 of this short series (it’s going to end with this article) we covered how you can test-drive the queries in a repository class. Returning query results is only part of the job of a repository though. The other part is to store objects (entities), retrieve them using something like a save() and a getById() method, and possibly delete them. Some people will implement these two jobs in one repository class, some like to use two or even many repositories for this. When you have a separate write and read model (CQRS), the read model repositories will have the querying functionality (e.g. find me all the active products), the write model repositories will have the store/retrieve/delete functionality.
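To make the store/retrieve side concrete, here is a rough sketch of such a contract. `Product`, `ProductRepository` and `InMemoryProductRepository` are hypothetical names, and the in-memory class is only a stand-in so the sketch runs on its own; a real implementation would talk to the database and be checked against it. The check is written against the interface, so the same function could be pointed at a database-backed implementation.

```php
<?php
declare(strict_types=1);

// Hypothetical entity, for illustration only.
final class Product
{
    private string $id;
    private string $name;

    public function __construct(string $id, string $name)
    {
        $this->id = $id;
        $this->name = $name;
    }

    public function id(): string
    {
        return $this->id;
    }

    public function name(): string
    {
        return $this->name;
    }
}

// Hypothetical write-model repository contract.
interface ProductRepository
{
    public function save(Product $product): void;

    /** @throws RuntimeException when no product has the given ID */
    public function getById(string $id): Product;
}

// Stand-in implementation that keeps entities in an array.
final class InMemoryProductRepository implements ProductRepository
{
    /** @var array<string, Product> */
    private array $products = [];

    public function save(Product $product): void
    {
        $this->products[$product->id()] = $product;
    }

    public function getById(string $id): Product
    {
        if (!isset($this->products[$id])) {
            throw new RuntimeException("Product $id not found");
        }

        return $this->products[$id];
    }
}

// Contract check that any ProductRepository implementation should pass.
function savedProductCanBeRetrieved(ProductRepository $repository): bool
{
    $repository->save(new Product('p-1', 'Keyboard'));

    return $repository->getById('p-1')->name() === 'Keyboard';
}
```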

Test-driving repository classes - Part 1: Queries

There’s something I’ve only been doing for a year or so, and I’ve come to like it a lot. My previous testing experiences were mostly at the level of unit tests or functional/system tests. What was left out of the equation was integration tests. The perfect example of this is a test that proves that your custom repository class works well with the type of database that you use for the project, and possibly the ORM or database abstraction library you use to talk with that database. A test for a repository can’t be a unit test; that wouldn’t make sense. You’d leave a lot of assumptions untested. So, no mocking is allowed.

About fixtures

System and integration tests need database fixtures. These fixtures should be representative and diverse enough to “fake” normal usage of the application, so that the tests using them will catch any issues that might occur once you deploy the application to the production environment. There are many different options for dealing with fixtures; let’s explore some of them.

Generate fixtures the natural way

The first option, which I assume not many people are choosing, is to start up the application at the beginning of a test, then navigate to specific pages, submit forms, click buttons, etc. until finally the database has been populated with the right data. At that point, the application is in a useful state and you can continue with the act/when and assert/then phases. (See the recent article “Pickled State” by Robert Martin on the topic of tests as specifications of a finite state machine).

Testing actual behavior

The downsides of starting with the domain model

All the architectural focus on having a clean and infrastructure-free domain model is great. It’s awesome to be able to develop your domain model in complete isolation; just a bunch of unit tests helping you design the most beautiful objects. And all the “impure” stuff comes later (like the database, UI interactions, etc.).

However, there’s a big downside to starting with the domain model: it leads to inside-out development. The first negative effect of this is that when you start with designing your aggregates (entities and value objects), you will definitely need to revise them when you end up actually using them from the UI. Some aspects may turn out to be not so well-designed at all, and will make no sense from the user’s perspective. Some functionality may have been designed well, but only theoretically, since it will never actually be used by any real client, except for the unit test you wrote for it.

Book review: Fifty quick ideas to improve your tests - Part 2

This article is part 2 of my review of the book “Fifty quick ideas to improve your tests”. I’ll continue to share some of my personal highlights with you.

Replace multiple steps with a higher-level step

If a test executes multiple tasks in sequence that form a higher-level action, often the language and the concepts used in the test explain the mechanics of test execution rather than the purpose of the test, and in this case the entire block can often be replaced with a single higher-level concept.

Book review: Fifty quick ideas to improve your tests - Part 1

Review

After reading “Discovery - Explore behaviour using examples” by Gáspár Nagy and Seb Rose, I picked up another book, which I bought a long time ago: “Fifty Quick Ideas to Improve Your Tests” by Gojko Adzic, David Evans, Tom Roden and Nikola Korac. As with so many books, I find there’s often a “right” time for them. When I tried to read this book for the first time, I was totally not interested and soon stopped trying to read it. But ever since Julien Janvier asked me if I knew any good resources on how to write good acceptance test scenarios, I kept looking around for more valuable pointers, and so I revisited this book too. After all, one of the authors of this book - Gojko Adzic - also wrote “Bridging the communication gap - Specification by example and agile acceptance testing”, which made a lasting impression on me. If I remember correctly, the latter doesn’t have too much practical advice on writing good tests (or scenarios), and it was my hope that “Fifty quick ideas” would.

Book review: Discovery - Explore behaviour using examples

I’ve just finished reading “Discovery - Explore behaviour using examples” by Gáspár Nagy and Seb Rose. It’s the first in a series of books about BDD (Behavior-Driven Development). The next parts are yet to be written/published. Part of the reason to pick up this book was that I’d seen it on Twitter (that alone would not be a sufficient reason of course). The biggest reason was that after delivering a testing and aggregate design workshop, I noticed that my acceptance test skills aren’t what they should be. After several years of not working as a developer on a project for a client, I realized again that (a quote from the book):

Mocking the network

In this series, we’ve discussed several topics already. We talked about persistence and time, the filesystem and randomness. The conclusion for all these areas: whenever you want to “mock” these things, you may look for a solution at the level of programming tools used (use a database or filesystem abstraction library, replace built-in PHP functions, etc.). But the better solution is always: add your own abstraction. Start by introducing your own interface (including custom return types), which describes exactly what you need. Then mock this interface freely in your application. But also provide an implementation for it, which uses “the real thing”, and write an integration test for just this class.
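Applied to the network, that advice could look roughly like the sketch below. Everything in it is an assumption made for illustration: the `ExchangeRateProvider` interface, both implementations, the `convert()` function, and the URL layout and JSON shape of the remote service. Only `HttpExchangeRateProvider` touches the network, so that is the one class that would get an integration test.

```php
<?php
declare(strict_types=1);

// Hypothetical interface describing exactly what the application needs.
interface ExchangeRateProvider
{
    public function rateFor(string $from, string $to): float;
}

// "The real thing": the only class that makes a network call.
// The query parameters and the {"rate": ...} response shape are assumptions.
final class HttpExchangeRateProvider implements ExchangeRateProvider
{
    private string $baseUrl;

    public function __construct(string $baseUrl)
    {
        $this->baseUrl = $baseUrl;
    }

    public function rateFor(string $from, string $to): float
    {
        $url = $this->baseUrl . '?from=' . urlencode($from) . '&to=' . urlencode($to);
        $json = file_get_contents($url);
        if ($json === false) {
            throw new RuntimeException('Could not fetch the exchange rate');
        }

        $data = json_decode($json, true);
        if (!is_array($data) || !isset($data['rate'])) {
            throw new RuntimeException('Unexpected exchange rate response');
        }

        return (float) $data['rate'];
    }
}

// Test double that always returns the configured rate.
final class FixedExchangeRateProvider implements ExchangeRateProvider
{
    private float $rate;

    public function __construct(float $rate)
    {
        $this->rate = $rate;
    }

    public function rateFor(string $from, string $to): float
    {
        return $this->rate;
    }
}

// Application code depends on the interface only.
function convert(int $amountInCents, string $from, string $to, ExchangeRateProvider $rates): int
{
    return (int) round($amountInCents * $rates->rateFor($from, $to));
}
```

In a unit test, `convert()` can then be called with a `FixedExchangeRateProvider`, so no request ever leaves the machine.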

Mocking at architectural boundaries: the filesystem and randomness

In a previous article, we discussed “persistence” and “time” as boundary concepts that need mocking by means of dependency inversion: define your own interface, then provide an implementation for it. There were three other topics left to cover: the filesystem, the network and randomness.
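For “time”, the same dependency inversion could be sketched as follows. `Clock`, `SystemClock`, `FixedClock` and `isExpired()` are hypothetical names chosen for this illustration, not necessarily the ones used in the articles.

```php
<?php
declare(strict_types=1);

// Hypothetical interface: the application asks this for the current time.
interface Clock
{
    public function now(): DateTimeImmutable;
}

// Production implementation backed by the system time.
final class SystemClock implements Clock
{
    public function now(): DateTimeImmutable
    {
        return new DateTimeImmutable('now');
    }
}

// Test implementation that always returns the same moment.
final class FixedClock implements Clock
{
    private DateTimeImmutable $now;

    public function __construct(DateTimeImmutable $now)
    {
        $this->now = $now;
    }

    public function now(): DateTimeImmutable
    {
        return $this->now;
    }
}

// Hypothetical application code that never reads the system time directly.
function isExpired(DateTimeImmutable $expiresAt, Clock $clock): bool
{
    return $clock->now() > $expiresAt;
}
```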

Mocking the filesystem

We already covered “persistence”, but only in the sense that we sometimes need a way to make in-memory objects persistent. After a restart of the application we should be able to bring back those objects and continue to use them as if nothing happened.

Mocking at architectural boundaries: persistence and time

More and more I’ve come to realize that I’ve been mocking less and less.

The thing is, creating test doubles is a very dangerous activity. For example, what I often see is something like this:

$entityManager = $this->createMock(EntityManager::class);
$entityManager->expects($this->once())
    ->method('persist')
    ->with($object);
$entityManager->expects($this->once())
    ->method('flush')
    ->with($object);

Or, what appears to be better, since we’d be mocking an interface instead of a concrete class:

$entityManager = $this->createMock(ObjectManagerInterface::class);

// ...

To be very honest, there isn’t a big difference between these two examples. If this code is in, for example, a unit test for a repository class, we’re not testing many of the aspects of the code that should have been tested instead.

Local and remote code coverage for Behat

Why code coverage for Behat?

PHPUnit has several built-in options for generating code coverage data and reports. Behat doesn’t. As Konstantin Kudryashov (@everzet) points out in an issue asking for code coverage options in Behat:

Code coverage is controversial idea and code coverage for StoryBDD framework is just nonsense. If you’re doing code testing with StoryBDD - you’re doing it wrong.

He’s right I guess. The main issue is that StoryBDD isn’t about code, so it doesn’t make sense to calculate code coverage for it. Furthermore, the danger of collecting code coverage data and generating coverage reports is that people will start using it as a metric for code quality. And maybe they’ll even set management targets based on coverage percentage. Anyway, that’s not what this article is about…

Convincing developers to write tests

Unbalanced test suites

Having spoken to many developers and development teams so far, I’ve recognized several patterns when it comes to software testing. For example:

- When the developers use a framework that encourages or sometimes even forces them to marry their code to the framework code, they only write functional tests - with a real database, making (pseudo) HTTP requests, etc. using the test tools bundled with the framework (if you’re lucky, anyway). For these teams it’s often too hard to write proper unit tests. It takes too much time, or is too difficult to set up.

- When the developers use a framework that enables or encourages them to write code that is decoupled from the framework, they have all these nice, often pretty abstract, units of code. Those units are easy to test. What often happens is that these teams end up writing only unit tests, and don’t supply any tests or “executable specifications” proving the correctness of the behavior of the application at large.

- Almost nobody writes proper acceptance tests. That is, most tests that use a BDD framework, are still solely focusing on the technical aspects (verifying URLs, HTML elements, rows in a database, etc.), while to be truly beneficial they should be concerned about application behavior, defined in a ubiquitous language that is used by both the technical staff and the project’s stakeholders.

Please note that these are just some very rough conclusions, there’s nothing scientific about them. It would be interesting to do some actual research though. And probably someone has already done this.

A better PHP testing experience Part II: Pick your test doubles wisely

In the introduction to this series I mentioned that testing object interactions can be really hard. Most unit testing tutorials cover this subject by introducing the PHPUnit mocking sub-framework. The word “mock” in the context of PHPUnit is given the meaning of the general concept of a “test double”. In reality, a mock is a very particular kind of test double. I can say after writing lots of unit tests for a couple of years now that my testing experience would have definitely been much better if I had known about the different kinds of test doubles that you can use in unit tests. Each type of test double has its own merits and it is vital to the quality of your test suite that you know when to use which one.
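As a rough illustration of two of these kinds, the sketch below uses hand-written doubles instead of the PHPUnit mocking API. All names (`Translator`, `Logger`, `TranslatorStub`, `LoggerSpy`, `Greeter`) are hypothetical. The stub only feeds a canned answer into the object under test; the spy records calls so they can be inspected afterwards.

```php
<?php
declare(strict_types=1);

// Hypothetical query-style dependency: returns something.
interface Translator
{
    public function translate(string $key): string;
}

// Hypothetical command-style dependency: performs a side effect.
interface Logger
{
    public function log(string $message): void;
}

// Stub: provides a canned answer, makes no assertions of its own.
final class TranslatorStub implements Translator
{
    private string $translation;

    public function __construct(string $translation)
    {
        $this->translation = $translation;
    }

    public function translate(string $key): string
    {
        return $this->translation;
    }
}

// Spy: records the calls it receives for later inspection.
final class LoggerSpy implements Logger
{
    /** @var list<string> */
    public array $messages = [];

    public function log(string $message): void
    {
        $this->messages[] = $message;
    }
}

// Object under test, using both collaborators.
final class Greeter
{
    private Translator $translator;
    private Logger $logger;

    public function __construct(Translator $translator, Logger $logger)
    {
        $this->translator = $translator;
        $this->logger = $logger;
    }

    public function greet(string $name): string
    {
        $greeting = $this->translator->translate('greeting') . ', ' . $name;
        $this->logger->log('Greeted ' . $name);

        return $greeting;
    }
}
```

A test would check the return value of `greet()` to cover the stubbed query, and the recorded `$messages` of the spy to cover the outgoing command.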

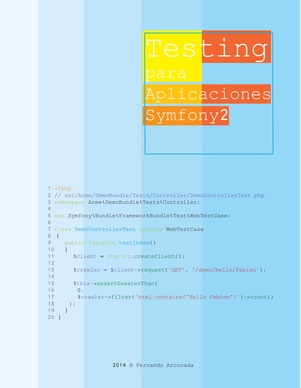

The PHP testing experience: Interview by Fernando Arconada

Fernando Arconada interviewed me about the subject of testing. He is writing a book about testing Symfony2 applications: Testing para Aplicaciones Symfony2. Fernando will translate this interview to Spanish and add it to his book, together with the articles in my A better PHP testing experience series.

Who is Matthias Noback?

I’m a PHP developer, writer and speaker. I live in Zeist, The Netherlands, with my girlfriend, a son of 9 and our newborn daughter. Currently I have my own business, called Noback’s Office. This really gives me a lot of freedom: I work as a developer on one project for about half of the week and in the remaining time I can either spend some time with my family or write blog posts, or finish my second book.

A better PHP testing experience Part I: Moving away from assertion-centric unit testing

In the introduction article of this series I quickly mentioned that I think unit testing often focuses too much on assertions. The historic reason for this is that in introductory articles and workshops it is often said that:

- You are supposed to pick the most specific assertion the testing framework offers.

- You are supposed to have only one assertion in each test method.

- You are supposed to write the assertion first, since that is the goal you are working towards.

I used to preach these things myself too (yes, “development with tests” often comes with a lot of preaching). But now I don’t follow these rules anymore. I will shortly explain my reasons. But before I do, let’s take a step back and consider something that is known as the Test framework in a tweet, by Mathias Verraes. It looks like this:

A better PHP testing experience: Introduction

This is the introduction to a series of articles related to what I call: the “PHP testing experience”. I must say I’m not really happy with it, and neither are many others, I think. In the last couple of years I’ve met many developers who experienced a lot of trouble while trying to make testing a serious part of their development workflow. It is, I admit, a hard thing to accomplish. I see many people fail at it. Either the learning curve is too steep for them or they are lacking some insight into the concepts and reasoning behind testing. This has unfortunately led many of them to stop trying.

Test Symfony2 commands using the Process component and asynchronous assertions

A couple of months ago I wrote about console commands that create a PID file containing the process id (PID) of the PHP process that runs the script. It is very common to create such a PID file when the process forks itself and thereby creates a daemon which could in theory run forever in the background, with no access to terminal input or output. The process that started the command can take the PID from the PID file and use it to determine whether or not the daemon is still running.
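The PID file mechanism can be sketched in a few lines of plain PHP. The function names `writePidFile()` and `isProcessRunning()` are hypothetical, and the sketch assumes either the posix extension or a Linux-style /proc filesystem is available; it is not the code from the article.

```php
<?php
declare(strict_types=1);

// What the daemon would do: store its own process ID in a file.
function writePidFile(string $path): void
{
    if (file_put_contents($path, (string) getmypid()) === false) {
        throw new RuntimeException("Could not write PID file $path");
    }
}

// What the starting process would do: read the PID and ask the OS about it.
function isProcessRunning(string $pidFile): bool
{
    if (!is_file($pidFile)) {
        return false;
    }

    $pid = (int) trim((string) file_get_contents($pidFile));
    if ($pid <= 0) {
        return false;
    }

    if (function_exists('posix_kill')) {
        // Signal 0 sends nothing; it only checks that the process exists.
        // Note: this also returns false for a process owned by another user.
        return posix_kill($pid, 0);
    }

    // Fallback without the posix extension; only works where /proc exists.
    return is_dir('/proc/' . $pid);
}
```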

Symfony2 & TDD: Testing a Configuration Class

Some of you may already know: I am a big fan of TDD - the real TDD, where you start with a failing test, and only write production code to make that specific test pass (there are three rules concerning this actually).

The TDD approach usually works pretty well for most classes in a project. But there are also classes which are somewhat difficult to test, like your bundle’s extension class, or its configuration class. The usual approach is to skip these classes, or to test them only using integration tests, when they are used in the context of a running application. This means you won’t have all the advantages of unit tests for these “units of code”. Some of the execution paths of the code in these classes may never be executed in a test environment, so errors will only be reported once the code is already running in production.

PHPUnit & Pimple: Integration Tests with a Simple DI Container

Unit tests are tests on a micro-scale. When unit testing, you are testing little units of your code to make sure that, given a certain input, they produce the output you expected. When your unit of code makes calls to other objects, you can “mock” or “stub” these objects to verify that a method is called a specific number of times, or to make sure the unit of code you’re testing receives the correct data from the other objects.

Symfony2: Testing Your Controllers

Apparently not everyone agrees on how to unit test their Symfony2 controllers. Some treat controller code as the application’s “glue”: a controller does the real job of transforming a request to a response. Thus it should be tested by making a request and checking the received response for the right contents. Others treat controller code just like any other code - which means that every path the interpreter may take should be tested.

How to Install Sismo

As easy as Fabien makes this look, in my case it wasn’t that easy to get Sismo (his small yet very nice personal continuous integration “server”) up and running on my local machine. These were the steps I had to take:

- Create a directory for Sismo, e.g. /Users/matthiasnoback/Sites/sismo

- Download sismo.php and copy the file to the directory you have just created

- Create a VirtualHost for Sismo (for example, use sismo.local as a server name

PHPUnit: Writing a Custom Assertion

When you see yourself repeating a number of assertions in your unit tests, or you have to think hard each time you make some kind of assertion, it’s time to create your own assertion, which wraps these complicated assertions into one single method call on your TestCase class. In the example below, I will create a custom assertion which recognizes the following JSON string as a “successful response”:

{"success":true}

Inside a TestCase we could run the following lines of code to verify the successfulness of the given JSON response string:
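A minimal sketch of what such a check could look like in plain PHP. This is not the article’s own listing and it is not tied to PHPUnit’s TestCase API; the function name `assertSuccessfulJsonResponse()` is hypothetical. Wrapping the individual checks in one function is what turns them into a single, reusable assertion.

```php
<?php
declare(strict_types=1);

// Hypothetical helper: throws unless the JSON string is a "successful response".
function assertSuccessfulJsonResponse(string $json): void
{
    $data = json_decode($json, true);

    if (!is_array($data)) {
        throw new UnexpectedValueException('Response is not a JSON object or array');
    }

    if (!array_key_exists('success', $data)) {
        throw new UnexpectedValueException('Response has no "success" key');
    }

    if ($data['success'] !== true) {
        throw new UnexpectedValueException('Response is not marked as successful');
    }
}
```

Calling `assertSuccessfulJsonResponse('{"success":true}')` returns silently, while any other input raises an exception with a message that says which check failed.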

Silex: set up your project for testing with PHPUnit

In my previous post I wrote down a set of requirements for Silex applications. There were a few things left for another post: first of all, I want to have unit tests, nicely organized in directories that correspond to the namespaces of my project’s classes. This means that the tests for Acme\SomeNamespace\SomeClass should be found in Acme\Tests\SomeNamespace\SomeClass.

Organizing your unit tests

I want to write my tests for the PHPUnit framework. This allows me to use some PHPUnit best practices: first of all we define our PHPUnit configuration file in /app/phpunit.xml.

First of all we point PHPUnit to a bootstrap file (whose contents we will define later). We also set an environment variable called “env” whose value is “test”. Finally we set the location of our app.php file inside the server variable “env”.

PHPUnit: create a ResultPrinter for output in the browser

PHPUnit 3.6 allows us to create our own so-called ResultPrinters. Using such a printer is quite necessary in the case of running your unit tests from within the browser (see my previous post), since we don’t print to a console, but to a screen. You can make this all as nice as you like, but here is the basic version of it.

Create the HtmlResultPrinter

First create the file containing your HtmlResultPrinter, for example in /src/Acme/DemoBundle/PHPUnit/HtmlResultPrinter.

Symfony2: running PHPUnit from within a controller

When you don’t have access to the command-line of your webserver, it may be nice to still run all your unit tests; so you need a way to execute the phpunit command from within a controller. This way, you can call your test suite by browsing to a URL of your site. To do things right, we start with a “test” controller /web/app_test.php containing these lines of code:

if (!in_array(@$_SERVER['REMOTE_ADDR'], array(
    '127.0.0.1',
    '::1',
))) {
    header('HTTP/1.0 403 Forbidden');
    exit('You are not allowed to access this file. Check '.basename(__FILE__).' for more information.');
}

require_once __DIR__.'/../app/bootstrap.php.cache';
require_once __DIR__.'/../app/AppKernel.php';

use Symfony\Component\HttpFoundation\Request;

$kernel = new AppKernel('test', true);
$kernel->loadClassCache();
$kernel->handle(Request::createFromGlobals())->send();

In fact, just copy everything from /web/app_dev.php but replace the string “dev” by “test”.

Symfony2: use a bootstrap file for your PHPUnit tests and run some console commands

Please note: There are better solutions to accomplish what I describe in this article. I’d like to recommend the ICBaseTestBundle here, which automatically creates a database for you and loads fixture data into it.

One of the beautiful things about PHPUnit is the way it can be easily configured: for example you can alter the paths in which PHPUnit will look for TestCase classes. In your Symfony2 project this can be done in the file /app/phpunit.xml (if it is called phpunit.xml.dist, rename it to phpunit.xml first!). This file contains a default configuration which suffices in most situations, but in case your TestCase classes are housed somewhere else than in your *Bundle/Test, add an extra “directory” to the “testsuite” element in phpunit.xml: